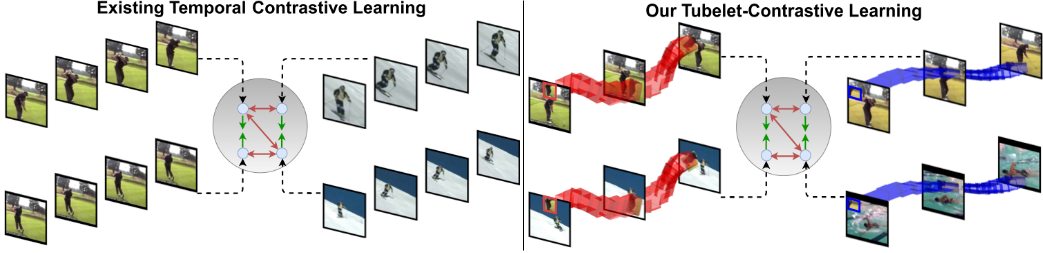

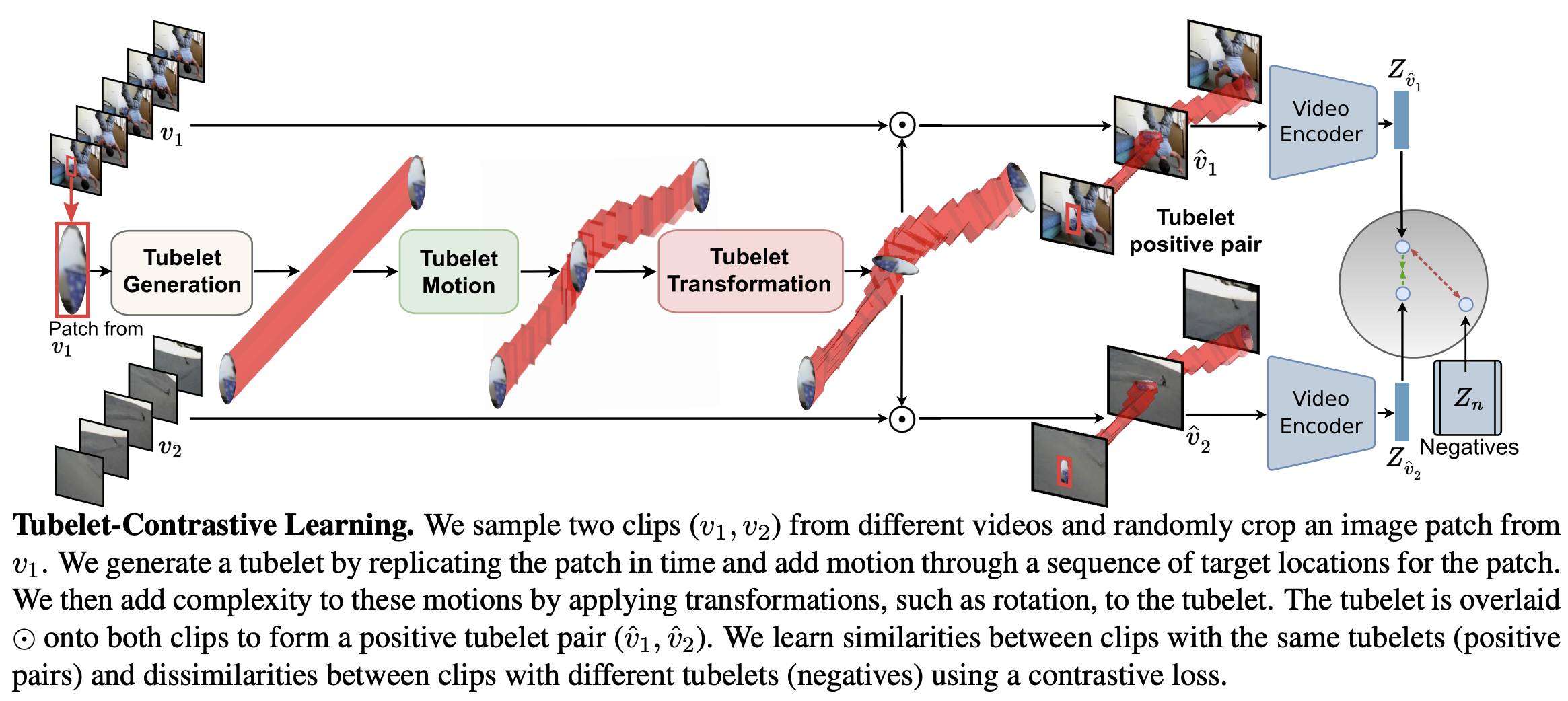

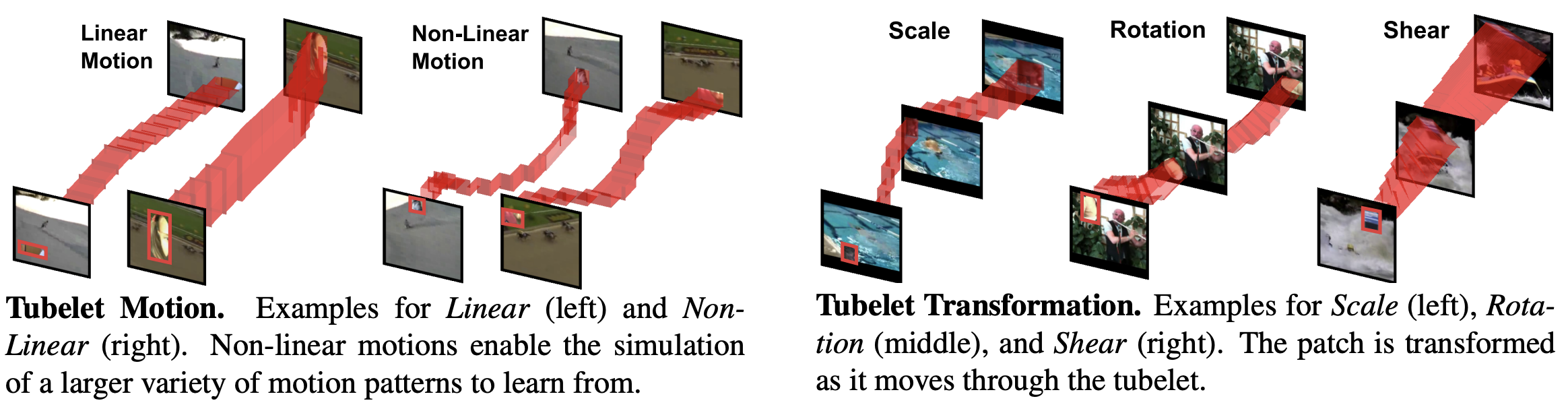

We propose tubelet-contrastive learning to reduce the spatial focus of video representations and instead learn similarities between spatiotemporal tubelet dynamics. We encourage the learned representation to be motion-focused by simulating a variety of tubelet motions. To further improve the data efficiency and generalizability of our method, we add complexity and variety to these motions through tubelet transformations. The figures show an overview of our proposed approach and examples of tubelet motions and transformations.
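
As a rough illustration of what simulating a tubelet motion could look like, the sketch below pastes one patch along a straight-line path into two clips with different backgrounds, so the two views share motion but not appearance. The function `paste_tubelet`, the linear trajectory, and all shapes are assumptions made for this example and are not taken from the released implementation.

```python
import numpy as np


def paste_tubelet(clip, patch, start_xy, end_xy):
    """Paste `patch` into every frame of `clip` along a straight-line path.

    clip:  (T, H, W, C) array of frames; a modified copy is returned.
    patch: (h, w, C) array holding the tubelet content.
    start_xy, end_xy: top-left (x, y) of the patch in the first and last frame.
    """
    out = clip.copy()
    T, H, W, _ = clip.shape
    h, w, _ = patch.shape
    # Linearly interpolate the top-left corner over time (assumed motion model).
    xs = np.linspace(start_xy[0], end_xy[0], T)
    ys = np.linspace(start_xy[1], end_xy[1], T)
    positions = []
    for t in range(T):
        # Round to pixel coordinates and keep the patch inside the frame.
        x = min(max(int(round(float(xs[t]))), 0), W - w)
        y = min(max(int(round(float(ys[t]))), 0), H - h)
        out[t, y:y + h, x:x + w] = patch
        positions.append((x, y))
    return out, positions


# Two clips with different (random) backgrounds receive the same tubelet,
# giving a pair that agrees only in the simulated motion.
rng = np.random.default_rng(0)
clip_a = rng.random((8, 64, 64, 3))
clip_b = rng.random((8, 64, 64, 3))
patch = rng.random((16, 16, 3))
view_a, path = paste_tubelet(clip_a, patch, (0, 0), (48, 48))
view_b, _ = paste_tubelet(clip_b, patch, (0, 0), (48, 48))
```

In a contrastive setup, `view_a` and `view_b` would play the role of a positive pair; tubelet transformations (for example scaling or rotating the patch over time) would be applied to `patch` per frame before pasting.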

We summarize the key observations from our experiments below.

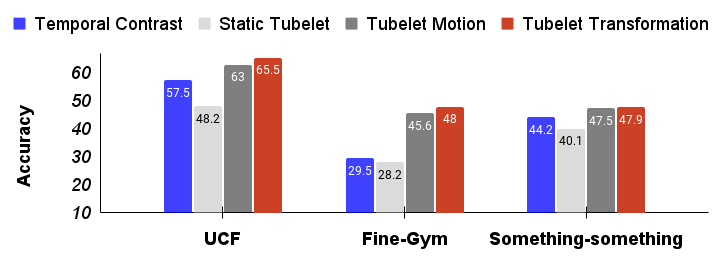

We demonstrate the impact of each component of our tubelet-contrastive learning. The motion within tubelets is critical to our model’s success: contrasting static tubelets obtained from our tubelet generation actually decreases performance relative to the temporal contrastive baseline. When tubelet motion is added, performance improves considerably. Finally, adding more motion types via tubelet transformations further improves the quality of the video representation. This highlights the importance of including a variety of motions beyond what is present in the pretraining data to learn generalizable video representations.

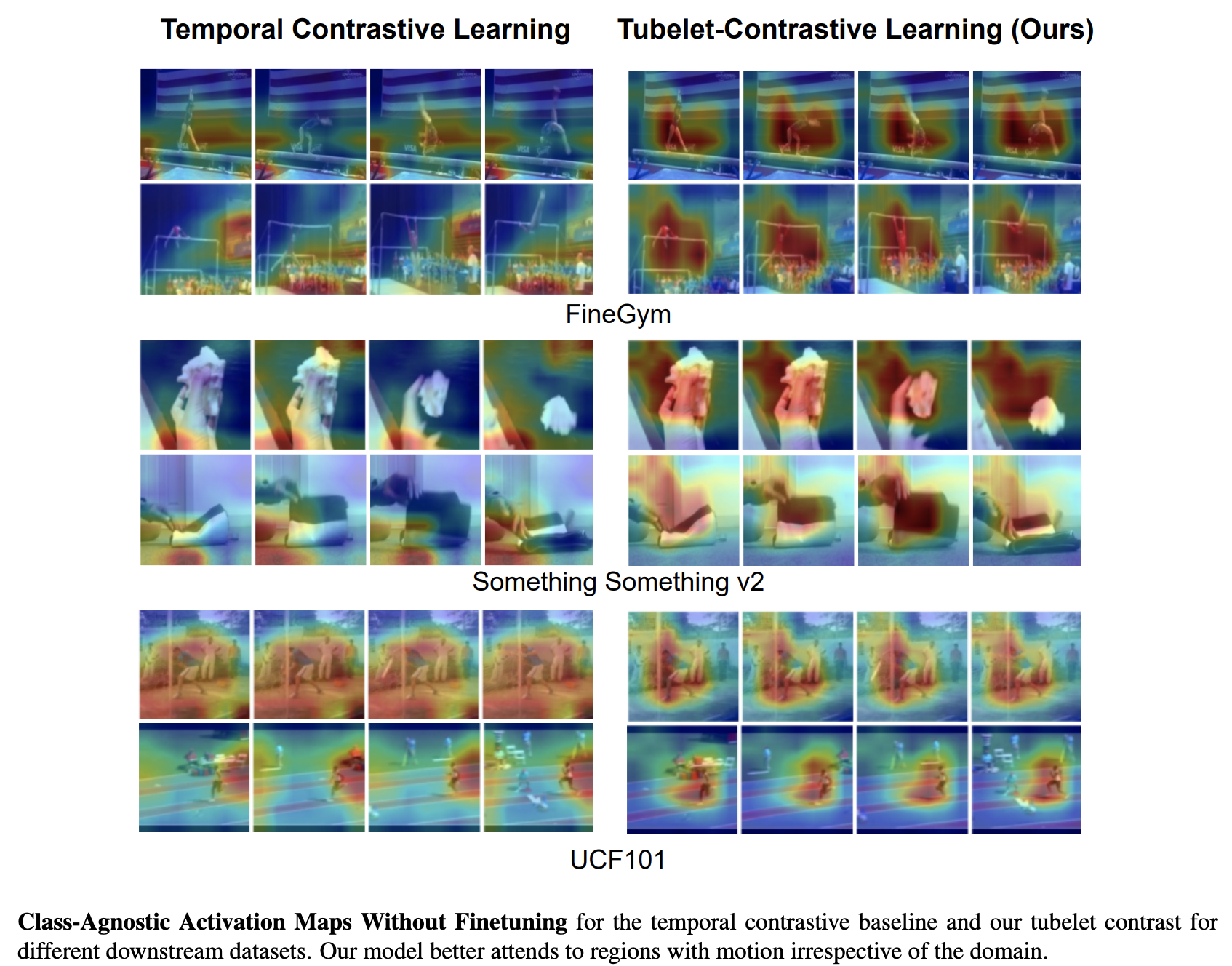

To understand what our model learns, we visualize class-agnostic activation maps on various downstream datasets, without any finetuning, for the temporal contrastive baseline and our approach. Without having previously seen any of the data, our approach attends to regions with motion, while the temporal contrastive baseline mostly attends to the background.

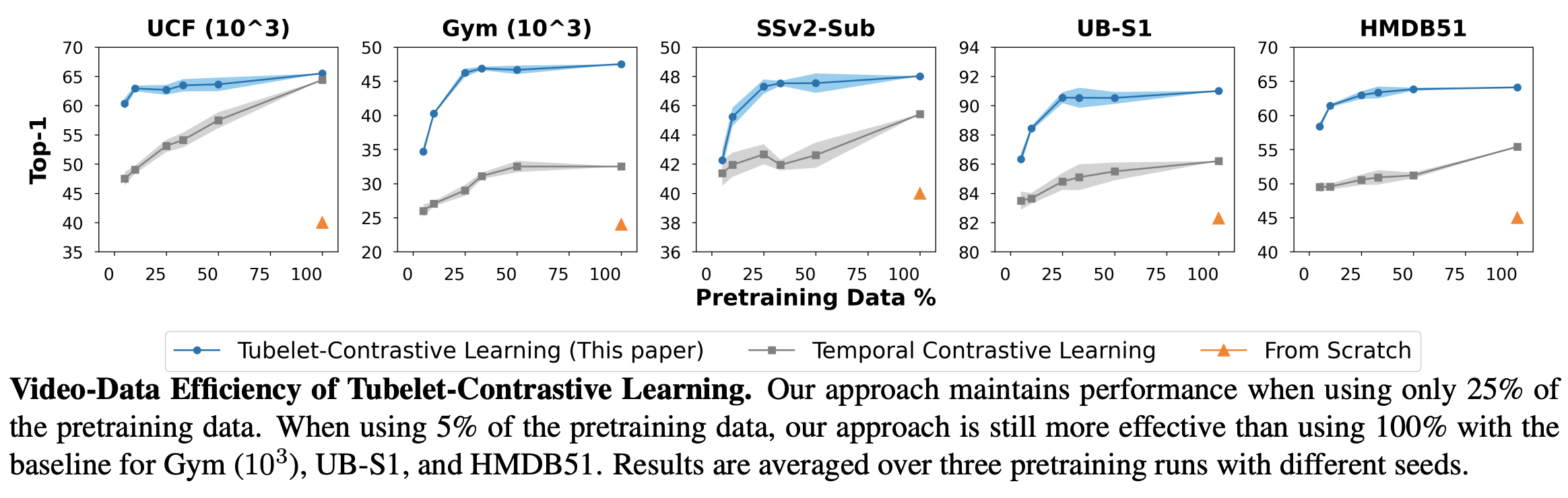

To demonstrate our method’s data efficiency, we pretrain using subsets of the Kinetics-400 training data. In particular, we sample 5%, 10%, 25%, 33% and 50% of the Kinetics-400 training set with three different seeds and use these subsets to pretrain our model and the temporal contrastive baseline. We compare the effectiveness of these representations after finetuning on 5 different downstream setups. On all downstream setups, our method maintains similar performance when the pretraining data is reduced to just 25%, while the performance of the temporal contrastive baseline decreases significantly.
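
The subset protocol above can be sketched as follows. This is a minimal illustration only: the seed values, the `video_ids` list, and the helper `sample_subsets` are hypothetical, and the sketch draws each subset independently rather than assuming any particular relationship between subsets of different sizes.

```python
import random


def sample_subsets(video_ids,
                   fractions=(0.05, 0.10, 0.25, 0.33, 0.50),
                   seeds=(0, 1, 2)):
    """Return {(fraction, seed): list of sampled video ids}.

    Each subset is drawn without replacement using its own seeded generator,
    so the same (fraction, seed) pair always yields the same subset.
    """
    subsets = {}
    for frac in fractions:
        k = round(len(video_ids) * frac)  # number of videos to keep
        for seed in seeds:
            rng = random.Random(seed)
            subsets[(frac, seed)] = rng.sample(video_ids, k)
    return subsets


# Placeholder ids standing in for the pretraining video list.
video_ids = [f"video_{i:05d}" for i in range(1000)]
subsets = sample_subsets(video_ids)
```

Each of the resulting 15 subsets would then be used for a separate pretraining run, with downstream results reported over the three seeds per fraction.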

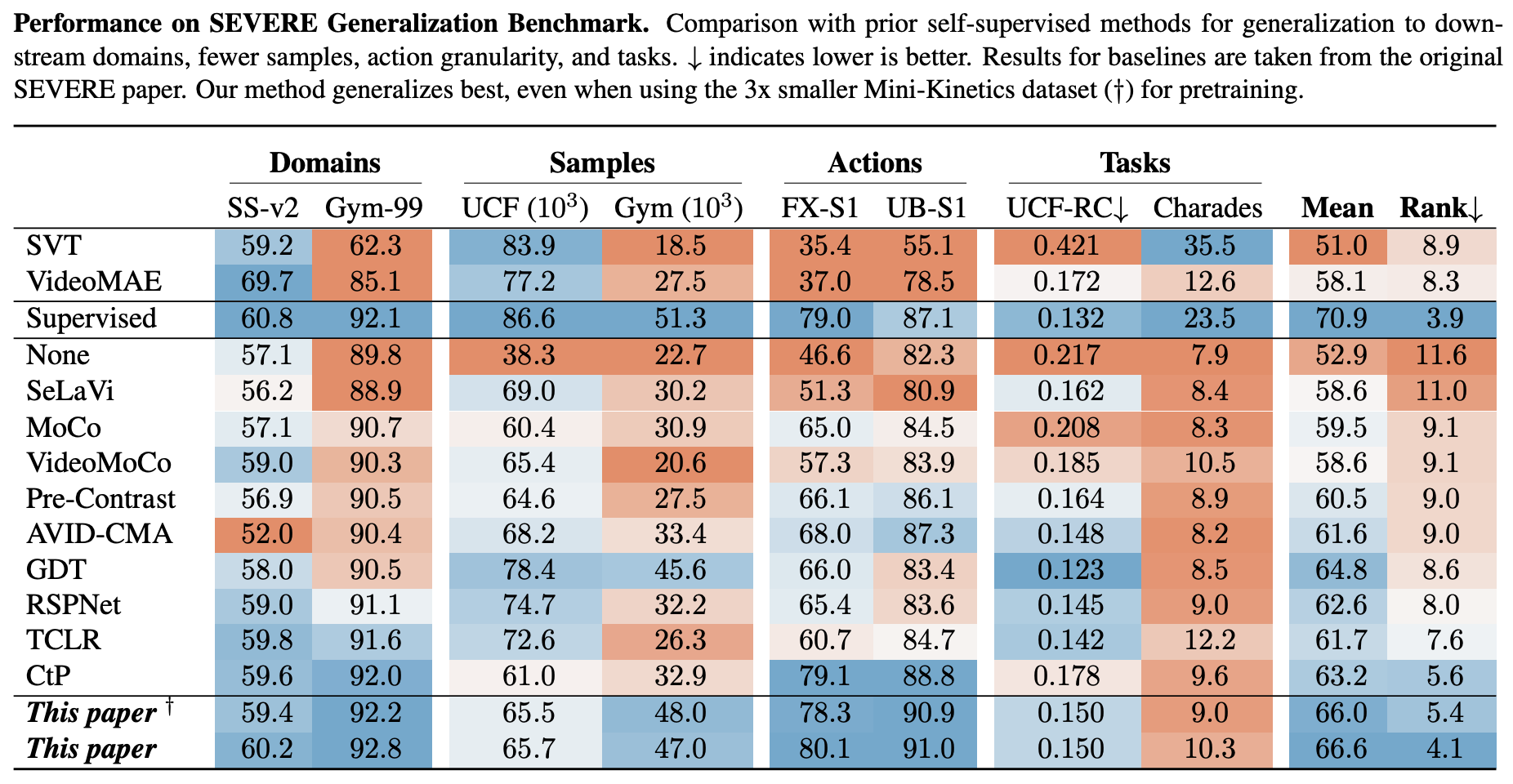

We compare to prior works on the challenging SEVERE benchmark, which evaluates the generalizability of video representations under domain shift, sample efficiency, action granularity, and task shift. We compare the mean performance and the average rank across all generalizability factors. Our method has the best mean performance (66.5) and achieves the best average rank (4.1). When pretraining with the 3x smaller Mini-Kinetics, our approach still achieves impressive results. We conclude that our method improves the generalizability of video self-supervised representations across these four downstream factors while being data-efficient.
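
For readers unfamiliar with the two summary statistics, the sketch below shows one plausible way to compute a mean score and an average rank across several evaluation setups. The method names and scores are toy placeholders, not benchmark results, and the helper `mean_and_average_rank` is hypothetical; ties are not handled specially.

```python
def mean_and_average_rank(scores):
    """scores: {method: [score per setup]}, where higher is better.

    Returns {method: (mean score, average rank)}; rank 1 is best per setup.
    """
    methods = list(scores)
    n_setups = len(next(iter(scores.values())))
    ranks = {m: [] for m in methods}
    for j in range(n_setups):
        # Sort methods from best to worst on setup j.
        ordered = sorted(methods, key=lambda m: scores[m][j], reverse=True)
        for r, m in enumerate(ordered, start=1):
            ranks[m].append(r)
    return {
        m: (sum(scores[m]) / n_setups, sum(ranks[m]) / n_setups)
        for m in methods
    }


# Toy numbers for illustration only.
toy = {
    "method_a": [70.0, 50.0, 60.0, 80.0],
    "method_b": [65.0, 55.0, 58.0, 70.0],
    "method_c": [60.0, 40.0, 62.0, 75.0],
}
summary = mean_and_average_rank(toy)
```

A lower average rank indicates that a method is consistently near the top across setups, which complements the mean when score ranges differ between setups.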

@inproceedings{thoker2023tubelet,
  author = {Thoker, Fida Mohammad and Doughty, Hazel and Snoek, Cees},
  title = {Tubelet-Contrastive Self-Supervision for Video-Efficient Generalization},
  booktitle = {ICCV},
  year = {2023},
}